Emoji-fying LLMs: Visualizing AI’s Text Understanding

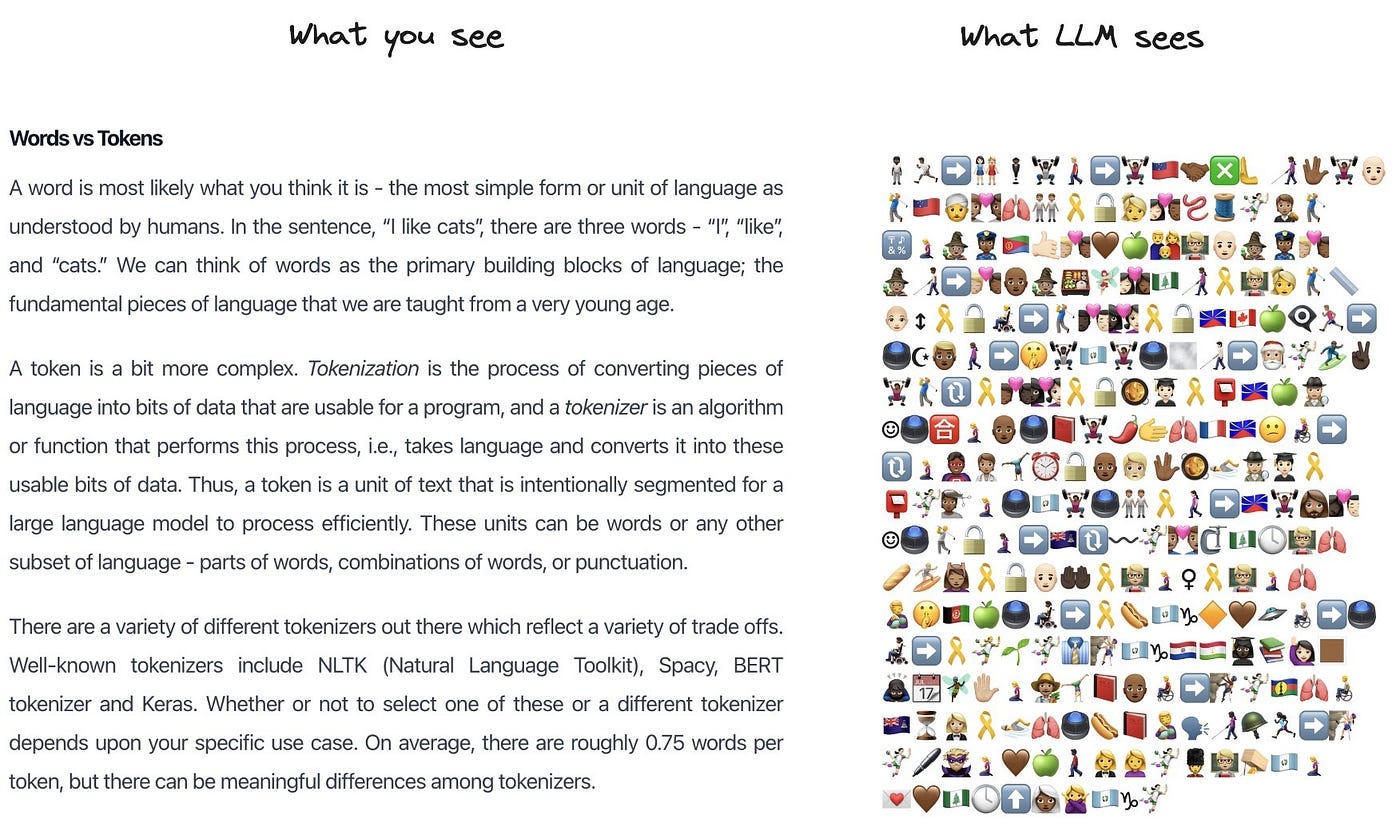

We talk to AI as if it understands us perfectly. We ask questions and give commands, and it amazes us as it replies, generates code, and holds surprisingly coherent conversations. But what is really happening behind those lines of text? What does an AI actually see when we type “Hello, world!”? The truth is that an AI’s native language isn’t English or any other human language. It is numbers: our text is chopped into pieces, each piece is mapped to an integer ID, and the model turns those IDs into long lists of numbers (vectors) that encode meaning. It’s through this lens of numbers that AI interprets our words and generates its responses.

But let’s face it, staring at a jumble of digits isn’t exactly illuminating. So, we’re going to try something a bit more… expressive. We’re going to translate those numbers into emojis! 😄

The Tokenization Process: Words vs. Tokens

Before we analyze our emoji representation, let’s clarify what tokenization actually means. Words are what we humans use every day, like “cat,” “love,” or “supercalifragilisticexpialidocious” (yes, that’s a real word, Mary Poppins fans!). But for LLMs, words are a bit too… wordy. They prefer tokens. Just as we use letters to form words and words to build sentences, an LLM uses tokens as the fundamental units of language. Here’s the twist: a token isn’t always a whole word. Sometimes it is a whole word, sometimes it is part of a word, and sometimes it is just a punctuation mark or a space. Tokens are the bite-sized pieces of language that LLMs can digest. It’s like putting a sentence through a linguistic food processor: what comes out might look a bit strange to us, but it’s delicious data for an AI.
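To make the idea concrete, here is a deliberately simplified sketch: a greedy longest-match tokenizer over a tiny, hypothetical vocabulary (TOY_VOCAB) that falls back to single characters for anything it doesn’t know. This is not how GPT-4’s tokenizer works (that one uses byte-level byte-pair encoding with roughly 100,000 learned tokens), but it shows how one text can come out as whole words, word fragments, and punctuation.

```python
# Toy illustration only: a greedy longest-match tokenizer over a tiny,
# hypothetical vocabulary. Real LLM tokenizers (e.g. GPT-4's byte-level BPE)
# learn their vocabulary from data and work differently in detail.
TOY_VOCAB = {"super", "cali", "fragil", "istic", "expi", "ali", "docious",
             "cat", "love", " ", "!", ","}


def toy_tokenize(text, vocab=TOY_VOCAB):
    """Split text into the longest vocabulary pieces, scanning left to right."""
    longest = max(len(piece) for piece in vocab)
    tokens, i = [], 0
    while i < len(text):
        # try the longest candidate first, shrinking until something matches
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            # unknown single characters become their own token
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens


print(toy_tokenize("cat"))
# ['cat']  (a whole word)
print(toy_tokenize("supercalifragilisticexpialidocious!"))
# ['super', 'cali', 'fragil', 'istic', 'expi', 'ali', 'docious', '!']
print(toy_tokenize("love cats!"))
# ['love', ' ', 'cat', 's', '!']  (unknown "s" falls back to one character)
```

Joining the pieces always gives back the original text; only the boundaries change.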

Setting the Stage: Our Emoji Toolkit

To visualize this process, we will use a bit of Python to turn tokens into emojis. We’ll use two Python libraries: tiktoken (OpenAI’s tokenizer library) to break text down into tokens, and emoji to get a big list of emojis to map those tokens to. Here’s what we need:

```python
# Install the libraries (Colab/Jupyter syntax; drop the "!" in a terminal)
!pip install tiktoken
!pip install emoji

import tiktoken
import emoji
import random

# Initialize the GPT-4 tokenizer
enc = tiktoken.encoding_for_model("gpt-4")
print(enc.n_vocab)  # total number of tokens in the vocabulary

# Initialize the emojis
emojis = list(emoji.EMOJI_DATA.keys())
random.seed(42)  # for reproducibility
random.shuffle(emojis)
print(len(emoji.EMOJI_DATA))  # number of available emojis
```

This code sets up our tokenizer (we’re using GPT-4’s, which tiktoken resolves to its cl100k_base encoding) and prepares our emoji arsenal: every emoji the emoji package knows about, in a shuffled order.
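Why seed the shuffle? Calling random.seed(42) before random.shuffle reorders the list the same way on every run, as long as the list itself is identical. A different version of the emoji package can change the list, which is one reason your emojis may differ from the ones shown in this article. The sketch below shows the idea with a private random.Random(42) instance (equivalent for this purpose, but it leaves the global generator untouched) and a hypothetical eight-emoji stand-in for the real emoji list.

```python
import random

# Hypothetical short stand-in for list(emoji.EMOJI_DATA.keys())
stand_in = ["😀", "🐍", "🚀", "🍕", "🎉", "🌍", "📏", "🍏"]


def shuffled(items, seed):
    """Return a shuffled copy of items using a private RNG seeded with `seed`."""
    rng = random.Random(seed)  # separate from the global random state
    out = list(items)          # copy, so the input list is not modified
    rng.shuffle(out)
    return out


first = shuffled(stand_in, 42)
second = shuffled(stand_in, 42)
print(first == second)                    # True: same seed + same input -> same order
print(sorted(first) == sorted(stand_in))  # True: shuffling only reorders
```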

The Magic Translator: Tokens to Emojis

Now, let’s create our token-to-emoji translator:

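```python
def text_to_tokens(text, max_per_row=10):
    """Render text as emojis: one emoji per token, max_per_row tokens per line."""
    ids = enc.encode(text)
    unique_tokens = set(ids)

    # map every distinct token ID we see to an emoji; the modulo wraps around
    # (reusing emojis) only if there are more distinct tokens than emojis
    id_to_emoji = {tok: emojis[i % len(emojis)] for i, tok in enumerate(unique_tokens)}

    # do the translation, one row of at most max_per_row emojis at a time
    lines = []
    for i in range(0, len(ids), max_per_row):
        lines.append(''.join(id_to_emoji[tok] for tok in ids[i:i + max_per_row]))
    return '\n'.join(lines)
```

This function takes our text, tokenizes it, and gives each distinct token ID its own emoji. The modulo only matters if a text ever contains more distinct tokens than there are emojis; in that case the emojis wrap around and get reused, so the mapping is no longer one-to-one. Note also that the mapping is rebuilt on every call, so an emoji is only meaningful within a single output: the same emoji can stand for different tokens in two different calls. It’s like giving each piece of the language puzzle its own colorful sticker!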
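The mapping step is easy to study on its own, without downloading a tokenizer. Below is a minimal sketch that works directly on a list of token IDs. The helper name ids_to_emoji_rows and the four-fruit emoji list are hypothetical, and unlike text_to_tokens it hands out emojis in order of first appearance (rather than set order) so the result is easy to predict. The IDs are the ones GPT-4’s tokenizer produces for the fox sentence in the quirky examples. With ten distinct tokens but only four emojis, the modulo wraps around, so an emoji no longer identifies a single token.

```python
def ids_to_emoji_rows(ids, emojis, max_per_row=10):
    """Hypothetical helper: the text_to_tokens mapping, applied to token IDs."""
    # Give each distinct ID the next emoji, in order of first appearance.
    # The modulo wraps around (reusing emojis) once we run out of emojis.
    id_to_emoji = {}
    for tok in ids:
        if tok not in id_to_emoji:
            id_to_emoji[tok] = emojis[len(id_to_emoji) % len(emojis)]

    # Chunk the sequence into rows of at most max_per_row emojis.
    rows = []
    for i in range(0, len(ids), max_per_row):
        rows.append("".join(id_to_emoji[tok] for tok in ids[i:i + max_per_row]))
    return "\n".join(rows)


fruits = ["🍎", "🍌", "🍒", "🍇"]  # deliberately tiny stand-in emoji list

# Token IDs for "The quick brown fox jumps over the lazy dog." (from this article)
fox_ids = [791, 4062, 14198, 39935, 35308, 927, 279, 16053, 5679, 13]
print(ids_to_emoji_rows(fox_ids, fruits, max_per_row=5))
# 🍎🍌🍒🍇🍎
# 🍌🍒🍇🍎🍌   <- 10 distinct tokens, 4 emojis: the modulo wraps around

# A repeated ID gets the same emoji each time (these IDs are made up)
print(ids_to_emoji_rows([5, 7, 5, 9], fruits))
# 🍎🍌🍎🍒
```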

Now, let’s try it out with a fun example:

```python
text = """
ChatGPT's smart, DALL-E's artsy,
Midjourney's quite the pro,
But when they tokenize our words,
It's emoji-time, yo!
"""

print(text_to_tokens(text, max_per_row=15))
```

What you’ll see is something like this (note: your emojis may vary, for example if a different version of the emoji package is installed):

```
🇦🇬💂🏼‍♂🧗🏼‍♀🙎🏽‍♀🙎🏿‍♂️🏃🏻‍♀‍➡👨🏽‍🦽‍➡🖖🏻🦸🏽👩‍🦰🙎🏿‍♂️🏜️🧑🏾‍⚖️🏄👍🏿
🧎🏼‍♂‍➡️👩‍💻🙎🏿‍♂️🏂🇸🇴👩🏽‍❤️‍👩🏾🏄💫👩🏾‍🦼‍➡️🔁🥷🏻🗑🇦🇺🏄🏃🏾‍♂️
🙎🏿‍♂️💆🏻‍♂👩🏾‍❤️‍👩🏿👨🏽‍🦽‍➡🤸🏼‍♀️👒
```

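Each emoji stands for one token, and a repeated emoji means a repeated token: the “’s” that appears four times in the poem gets the same emoji every time. Our simple poem has been transformed into a colorful stand-in for the sequence of token IDs an LLM actually processes.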

The Method to the Madness

You might be wondering, “Why go through all this trouble?” Well, tokenization serves several important purposes:

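Efficiency: Neural networks can only compute on numbers, and subword tokens keep sequences much shorter than feeding text in one character at a time.

Vocabulary Management: By breaking rare words into subwords, a fixed vocabulary (about 100,000 tokens for GPT-4’s tokenizer) can represent practically any word, even one it has never seen whole.

Language Agnosticism: Because GPT-4’s tokenizer works on raw bytes, it can handle any language, and even emojis themselves!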
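Where do subword vocabularies come from? GPT-style tokenizers are built with byte-pair encoding (BPE): start from small units and repeatedly merge the most frequent adjacent pair into a new token. Here is a toy sketch of that training loop on the characters of a made-up five-word corpus. Real tokenizers such as GPT-4’s operate on bytes, pre-split text with a regular expression, and learn on the order of 100,000 merges, so treat this only as an illustration of the idea.

```python
from collections import Counter


def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words (each word is a tuple of symbols)."""
    pairs = Counter()
    for word, freq in words.items():
        for a, b in zip(word, word[1:]):
            pairs[(a, b)] += freq
    # ties go to the pair seen first
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for word, freq in words.items():
        out, i = [], 0
        while i < len(word):
            if i + 1 < len(word) and (word[i], word[i + 1]) == pair:
                out.append(word[i] + word[i + 1])
                i += 2
            else:
                out.append(word[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def train_toy_bpe(corpus, num_merges):
    """Learn up to num_merges merges from a whitespace-split corpus."""
    words = Counter(tuple(w) for w in corpus.split())  # start from characters
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words


merges, words = train_toy_bpe("low low low lower lowest", num_merges=3)
print(merges)  # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
print(words)   # {('low',): 3, ('lowe', 'r'): 1, ('lowe', 's', 't'): 1}
```

After three merges, “low” is a single token, while “lower” and “lowest” share the subword “lowe” plus a few leftover characters. That is the same pattern that lets a real tokenizer cover rare words with a fixed vocabulary.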

The Quirks of Tokenization

Now, let’s look at some quirky examples to see how tokenization can sometimes surprise us:

```python
quirky_texts = [
    "lol",
    "supercalifragilisticexpialidocious",
    "🤣😂🙃",
    "https://www.example.com",
    "The quick brown fox jumps over the lazy dog.",
]

for text in quirky_texts:
    print(f"Original: {text}")
    print(f"Tokenized: {text_to_tokens(text)}\n")
```

You might notice:

Short, common expressions like “lol” might be a single token (one emoji).

Long words get broken into multiple tokens.

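Emojis themselves get tokenized, often into more tokens than there are emojis: our three emojis became eight tokens.

URLs often get split in interesting ways (five tokens for this one).

Even an everyday sentence has quirks: “The” and “the” receive different emojis, because capitalization and the leading space both change the token.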
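Why does a three-emoji string turn into more than three tokens? GPT-4’s tokenizer operates on UTF-8 bytes, and each of these emojis takes four bytes. This standard-library snippet prints those bytes. All three emojis start with the same two bytes (240, 159), which likely explains why the same token, and therefore the same emoji, can show up more than once in the output; exactly how the 12 bytes are grouped depends on the tokenizer’s learned merges.

```python
emoji_text = "🤣😂🙃"

for ch in emoji_text:
    # one code point per emoji, but four bytes each in UTF-8
    print(ch, hex(ord(ch)), list(ch.encode("utf-8")))

total_bytes = len(emoji_text.encode("utf-8"))
print(len(emoji_text), "code points,", total_bytes, "bytes")

# Output:
# 🤣 0x1f923 [240, 159, 164, 163]
# 😂 0x1f602 [240, 159, 152, 130]
# 🙃 0x1f643 [240, 159, 153, 131]
# 3 code points, 12 bytes
```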

```
Original: lol
Tokenized: 🧎‍♀

Original: supercalifragilisticexpialidocious
Tokenized: 🤾🏻‍♀️🧑‍🦽🈴🍏🧎‍♀📏🧑‍🦼‍➡️🧑🏾‍🦼‍➡️🤙🏻✌🏿
🙍‍♀️

Original: 🤣😂🙃
Tokenized: ✌🏿📏🧎‍♀🍏🤾🏻‍♀️✌🏿🙍‍♀️🧑🏾‍🦼‍➡️

Original: https://www.example.com
Tokenized: 🍏📏🤾🏻‍♀️🧎‍♀🧑🏾‍🦼‍➡️

Original: The quick brown fox jumps over the lazy dog.
Tokenized: 🙍‍♀️🈴🍏🧑‍🦼‍➡️🧎‍♀✌🏿🤙🏻🧑🏾‍🦼‍➡️🤾🏻‍♀️📏
```

Peeking Behind the Emoji Curtain

While our emoji representation gives us a colorful way to visualize tokenization, let’s take a moment to see what the LLM actually “sees”: plain integer token IDs. We can get them with the enc.encode() function, applied here to the last sentence from our quirky list:

enc.encode(text)Output:

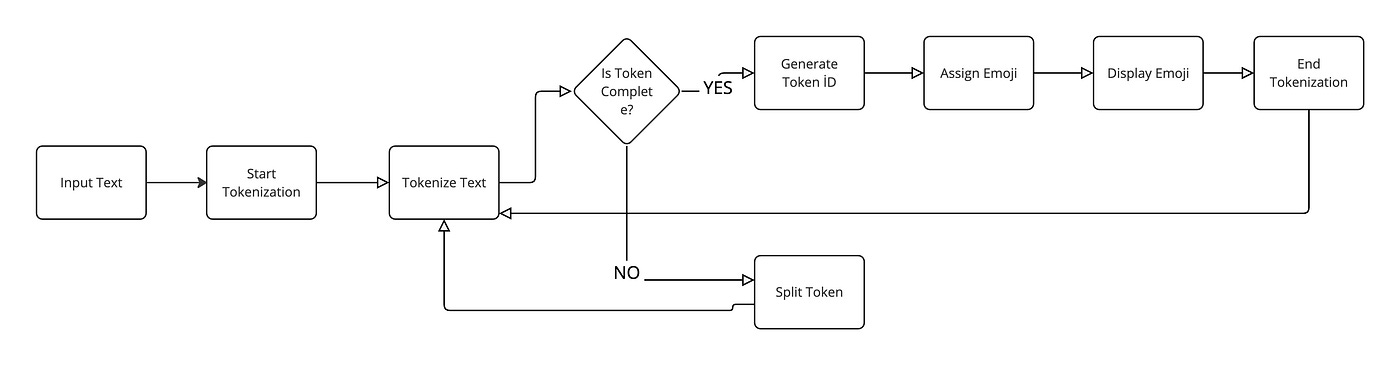

[791, 4062, 14198, 39935, 35308, 927, 279, 16053, 5679, 13]And finally, here is a visual flowchart to represent each step in our code.
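To read the token IDs above, we can line them up with the sentence. The pairing below is a hedged reconstruction: it assumes each word (with its leading space) and the final period is exactly one token, which the count of ten IDs for nine words plus a period suggests. In a live session you could confirm each piece with enc.decode([token_id]). The pairing highlights a classic quirk: “The” and “ the” are different tokens.

```python
# Token IDs printed above for "The quick brown fox jumps over the lazy dog."
fox_ids = [791, 4062, 14198, 39935, 35308, 927, 279, 16053, 5679, 13]

# Assumption: one token per word (leading space included) plus one for the period.
pieces = ["The", " quick", " brown", " fox", " jumps", " over", " the", " lazy", " dog", "."]
assert len(pieces) == len(fox_ids)
assert "".join(pieces) == "The quick brown fox jumps over the lazy dog."

for token_id, piece in zip(fox_ids, pieces):
    print(f"{token_id:>6} -> {piece!r}")

lookup = dict(zip(pieces, fox_ids))
print(lookup["The"], lookup[" the"])  # 791 279: capitalization and the leading space matter
```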

The Takeaway

Next time you’re chatting with an AI, remember that it’s seeing your words as a string of tokens — each one a unique symbol in its vast vocabulary. It’s like you’re speaking in a secret code, and the AI has the decoder key!

Understanding tokenization helps us appreciate the complexity of language processing and the clever ways AI manages to understand and generate human-like text. It’s a reminder that while AI can seem almost magical, there’s a method to the madness — a method that sometimes looks like an emoji party! 🎉🧠💻

So, the next time an AI gives you a slightly odd response, have some empathy. Your simple question might look like a carnival of emojis to it! 🎭🎪🌟

Link to Colab code — https://colab.research.google.com/drive/1DvtiTbaNN0zVClEORZxRhxhfz3KBpuMW?usp=sharing

Inspiration for the article came from Andrej Karpathy — check out his tweet https://x.com/karpathy/status/1816637781659254908